Abstract

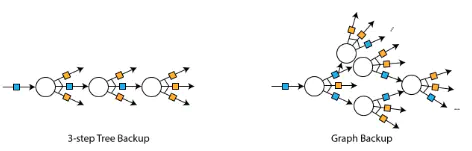

Bootstrapped value estimation has become a widely adopted ingredient for modern reinforcement learning algorithms. These methods compute a target value based on observed data and predictions for future values. The approximation error of the target value, which comes from stochastic dynamics and inaccurate predictions, can significantly affect the data efficiency of RL algorithms. Multi-step methods, such as n-step Q learning and TD(lambda), leverage the chain structure of the data, alleviating the effect of inaccurate predictions and allowing credit assignment across a longer time horizon. However, the main limitation of such multi-step methods is that they fail to exploit the graph structure of certain MDPs by only treating each trajectory independently, resulting in an inadequate estimate of the target value that misses the intersections between multiple trajectories. In this paper, we propose to treat the transition data of an MDP as a graph, and define a novel backup operator exploiting this graph structure. Comparing to multi-step backup, our graph backup method allows counterfactual credit assignment, and can reduce the variance that comes from stochastic environment dynamics. Our empirical evaluation on MiniGrid and Minatar shows graph backup can greatly improve data efficiency compared to one-step and multi-step backup.